Kan Jen Cheng

I'm a research assistant at UC Berkeley. I currently do audio-visual research in the Berkeley Speech Group (BAIR), advised by Professor Gopala Anumanchipalli. My research interests lie at the intersection of audio-visual learning, multi-modal perception, and generative AI. While recent LLMs have mastered language, I view text as a reduction of reality. Instead, I aim to build multi-modal systems that perceive the world through the synergy of sight and sound, with spatial awareness and physical grounding.

Research Philosophies

My work is driven by two core philosophies: human-centered perception, where I model speech characteristics, affective dynamics, and joint cognitive attention to capture how humans naturally experience the world; and creative media, where I develop tools that offer precise, object-level control for content creation.

Future Directions

As an audio-visual researcher, I have witnessed the power of multimodal synergy, yet I realize that correlation alone is insufficient. True perception requires understanding the physical laws, such as geometry, dynamics, and material interactions, that govern the spaces where sight and sound coexist. Consequently, my future research will focus on spatial and physical learning to contribute to the development of a comprehensive world model. I aim to move beyond surface-level alignment to construct digital twins that not only mimic the appearance of the environment but also simulate its underlying physical reality. By grounding audio-visual generation in these physical truths, we can enable agents to reason about the world through a unified sensory experience.

Research

|

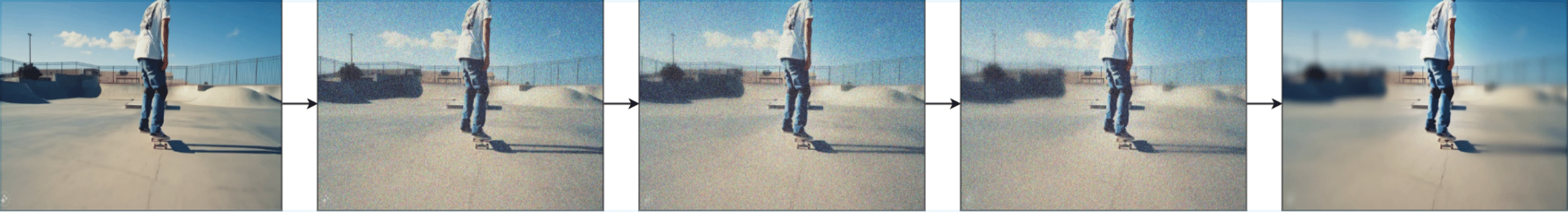

CAVE: Coherent Audio-Visual Emphasis via Schrödinger Bridge

Kan Jen Cheng*, Weihan Xu*, Koichi Saito, Nicholas Lee, Yisi Liu, Jiachen Lian, Tingle Li, Alexander H. Liu, Fangneng Zhan, Masato Ishii, Takashi Shibuya, Paul Pu Liang, Gopala Anumanchipalli under review Collaborate with Sony AI & MIT Media Lab project page / arXiv A realization of human's audio-visual selective attention that jointly emphasizes the selected object visually and acoustically based on flow-based Schrödinger bridge. |

|

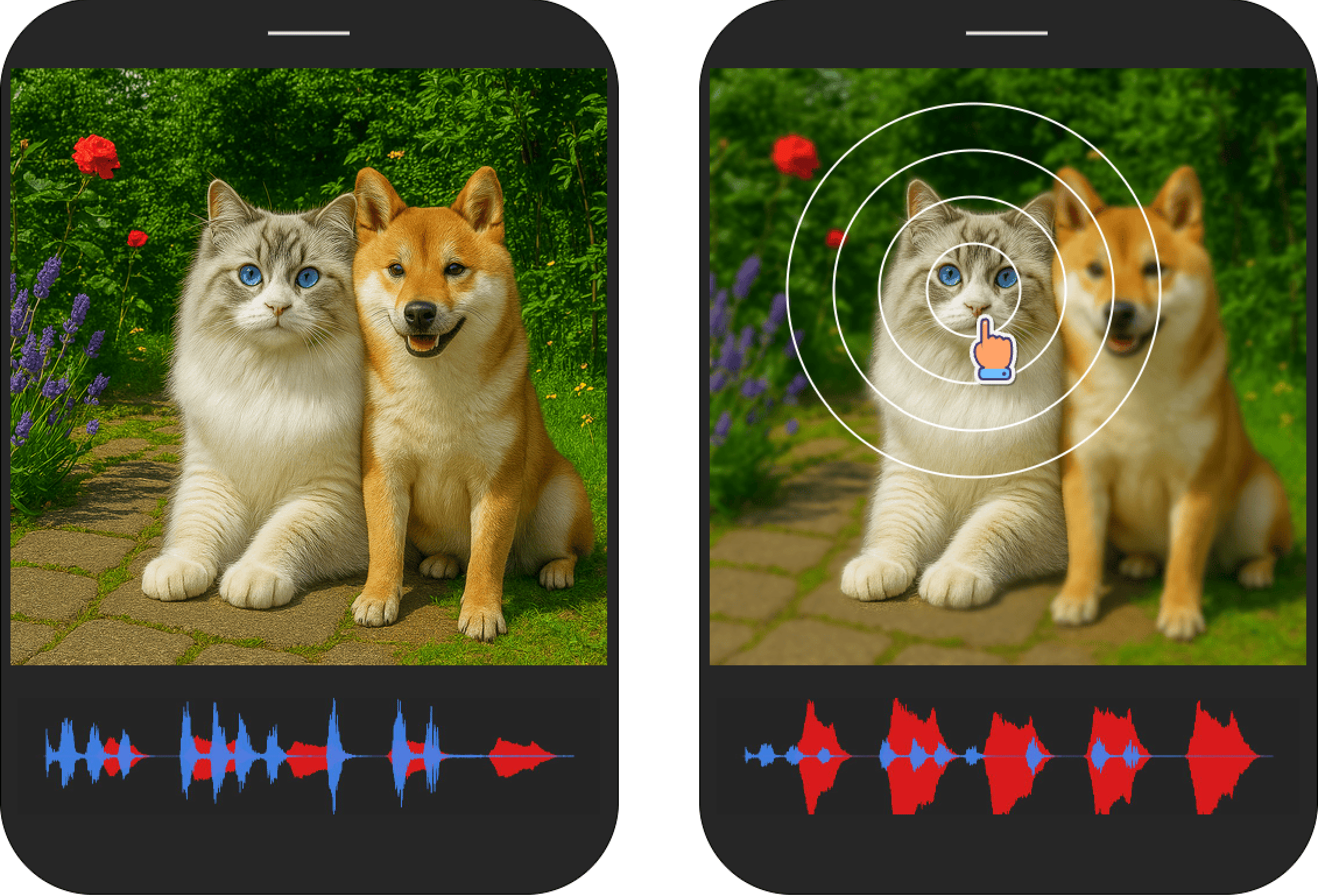

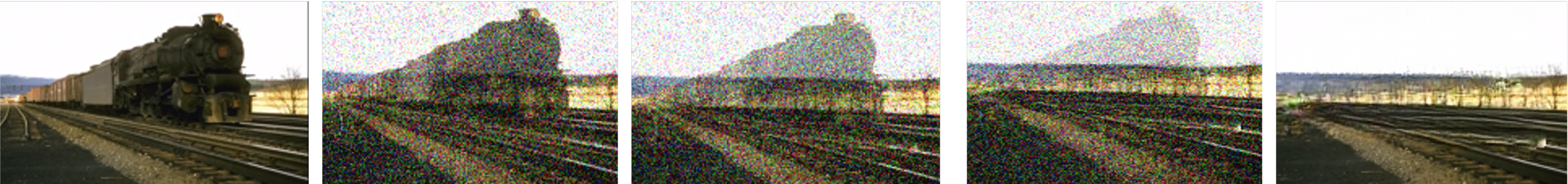

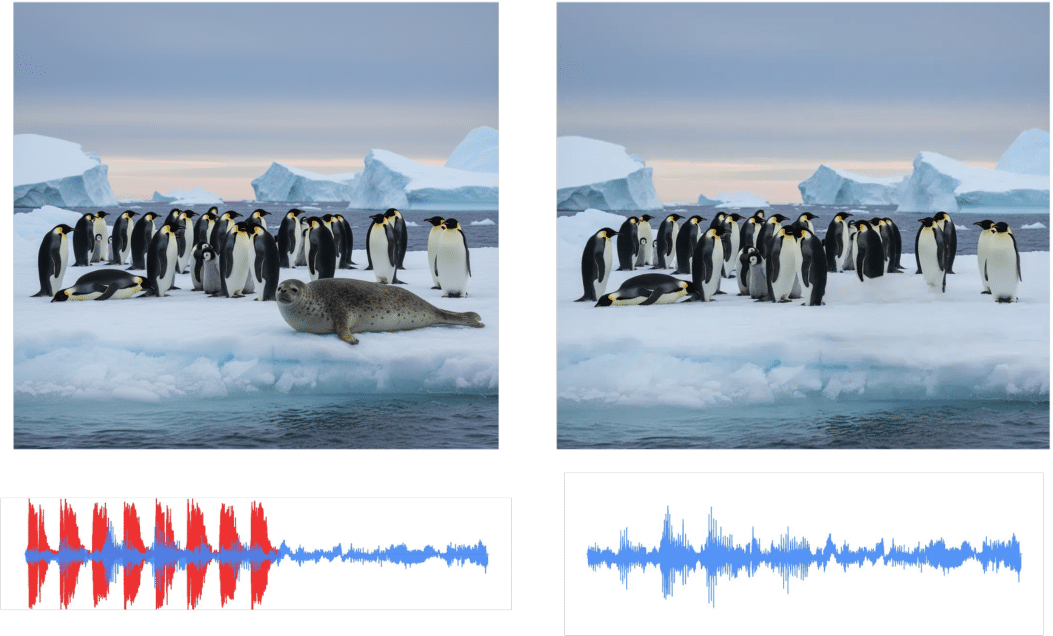

Schrödinger Audio-Visual Editor: Object-Level Audiovisual Removal

Weihan Xu*, Kan Jen Cheng*, Koichi Saito, Muhammad Jehanzeb Mirza, Tingle Li, Yisi Liu, Alexander H. Liu, Liming Wang, Masato Ishii, Takashi Shibuya, Yuki Mitsufuji, Gopala Anumanchipalli, Paul Pu Liang under review Collaborate with Sony AI & MIT Media Lab project page / arXiv A text-guided joint audio-visual texture editing model based on flow-based Schrödinger bridge. |

|

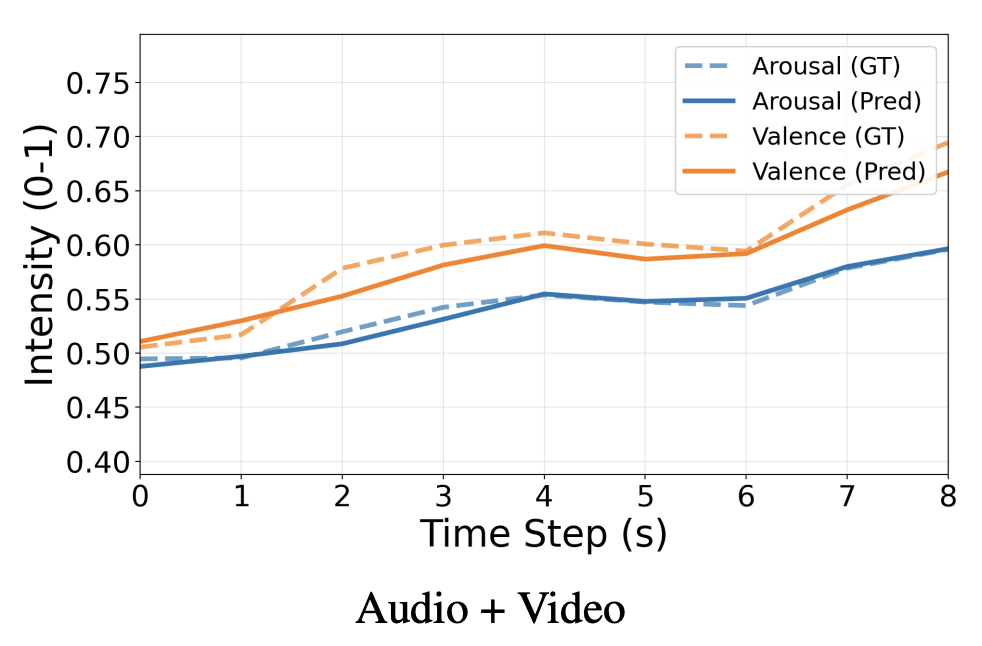

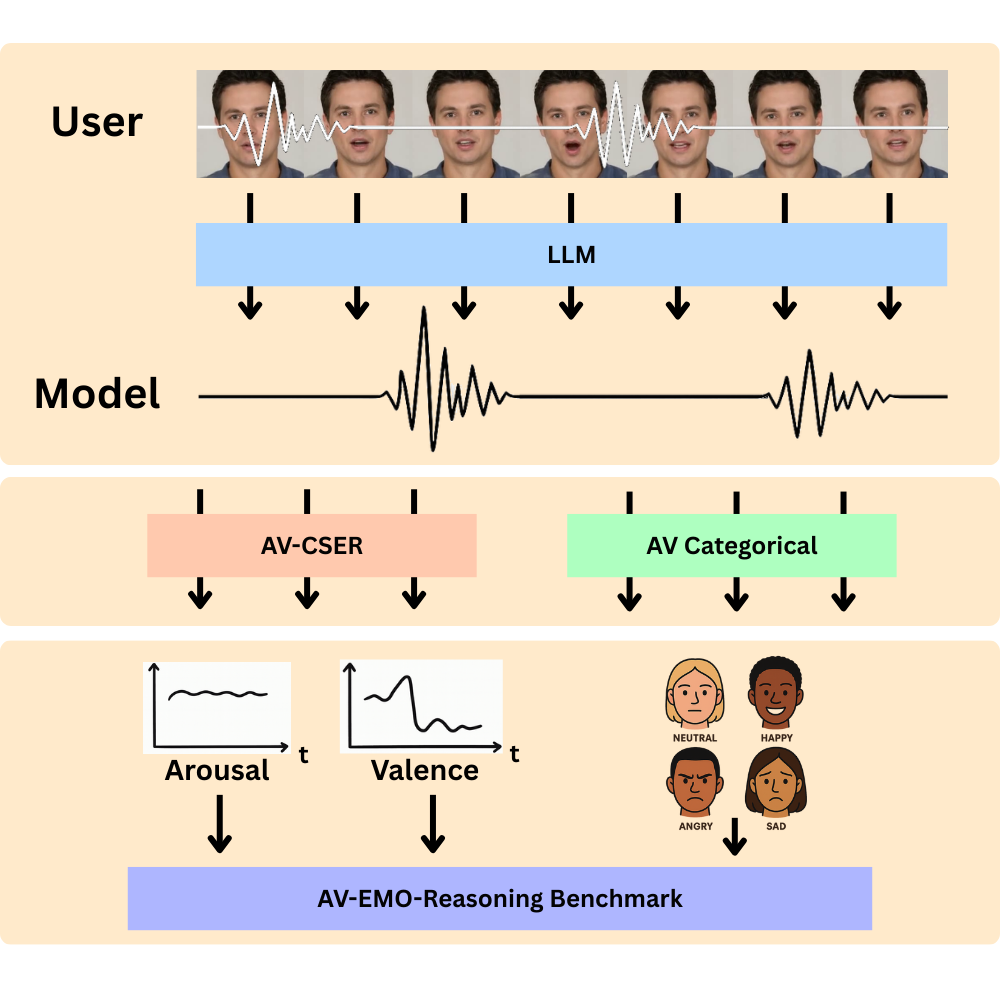

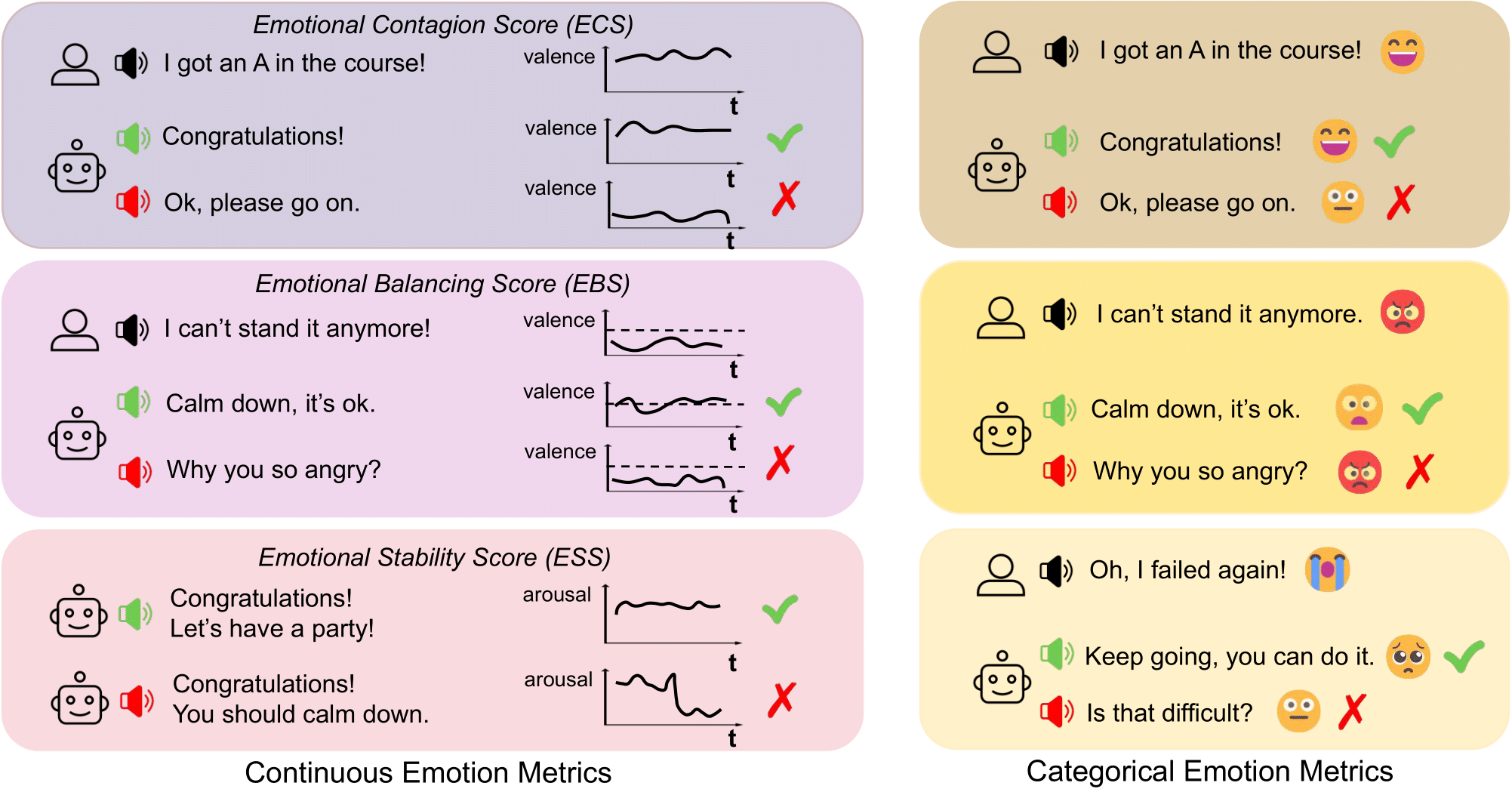

AV-EMO-Reasoning: Benchmarking Emotional Reasoning Capabilities in Omni-modal LLMS with Audio-visual Cues

Krish Patel*, Dingkun Zhou*, Ajay Kankipati, Akshaj Gupta, Zeyi Austin Li, Mohul Shukla, Vibhor Narang, Sara Kofman, Zongli Ye, Grace Wang, Xiaoyu Shi, Tingle Li, Guan-Ting Lin, Kan Jen Cheng, Huang-Cheng Chou, Jiachen Lian, Gopala Anumanchipalli ICASSP, under review project page / arXiv A holistic benchmark for assessing emotional coherence in omni-modal LLMs through continuous, categorical, and perceptual metrics. |

|

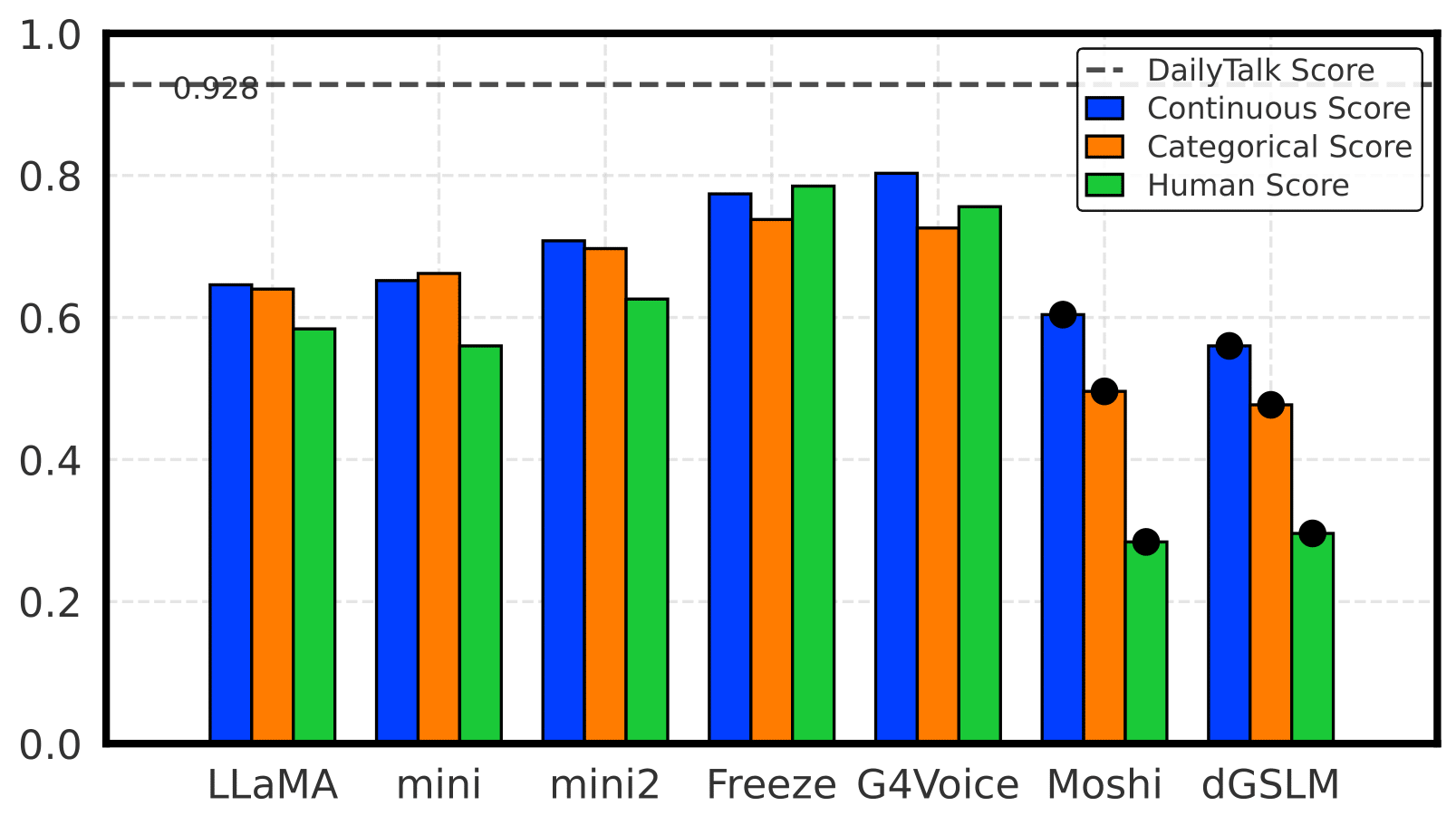

EMO-Reasoning: Benchmarking Emotional Reasoning Capabilities in Spoken Dialogue Systems

Jingwen Liu*, Kan Jen Cheng*, Jiachen Lian, Akshay Anand, Rishi Jain, Faith Qiao, Robin Netzorg, Huang-Cheng Chou, Tingle Li, Guan-Ting Lin, Gopala Anumanchipalli ASRU, 2025 project page / arXiv A holistic benchmark for assessing emotional coherence in spoken dialogue systems through continuous, categorical, and perceptual metrics. |

|

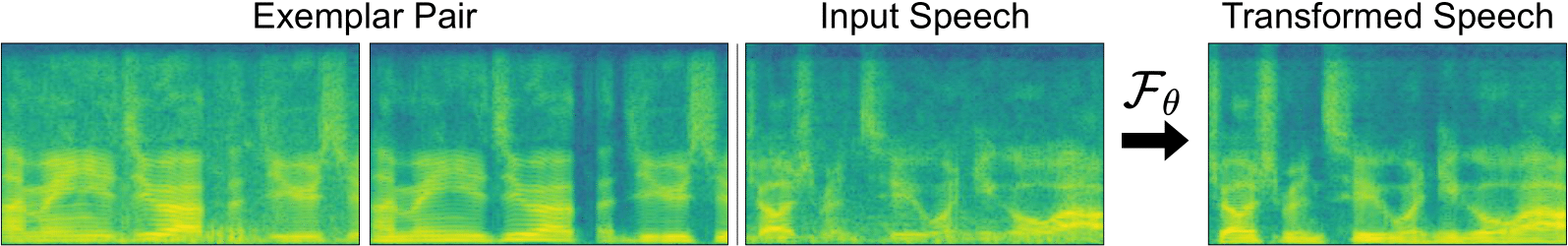

Audio Texture Manipulation by Exemplar-Based Analogy

Kan Jen Cheng*, Tingle Li*, Gopala Anumanchipalli ICASSP, 2025 project page / arXiv An exemplar-based analogy (in-context learning) model for audio texture manipulation that uses paired speech examples to learn transformations. |

Last updated: Template from Jon Barron.